Another "groundbreaking" AI safety report has emerged, and I can barely contain my excitement. Said no one ever. This latest exercise in corporate grandstanding is just a thinly veiled attempt to distract us from the glaring issues that have been plaguing the AI industry for years.

Let's take a look at some of the laughable "findings" and "recommendations" that have been peddled as revolutionary:

- Predictable platitudes about the importance of "transparency" and "accountability" - code for "we'll do the bare minimum to avoid regulation"

- Vague promises to "invest in research" and "collaborate with stakeholders" - because throwing money at a problem always solves it, right?

- Cherry-picked statistics and anecdotes to create the illusion of progress - meanwhile, the actual problems continue to fester

It's astonishing how many gullible people, including self-proclaimed "experts" and influencers, are willing to swallow this nonsense hook, line, and sinker.

We've seen this song and dance before, and it always ends in disaster. Remember the infamous "AI for social good" initiatives that turned out to be nothing more than PR stunts? Or the "AI safety frameworks" that were exposed as mere fig leaves for corporate malfeasance? The list of failures is endless, and yet, we're still expected to believe that this time will be different. Please.

The real horror stories are the ones that don't make it into these whitewashed reports. Like the countless individuals who have been harmed by AI-driven decision-making systems, or the devastating consequences of unchecked AI proliferation. But hey, who needs to acknowledge those when you can just spin a narrative of "progress" and "innovation"? It's a clever trick, really - distract the masses with shiny objects while the actual problems continue to metastasize.

And let's not forget the statistical embarrassment that is the AI industry's track record on safety. With a failure rate that would be laughable in any other field, it's a wonder that anyone takes these reports seriously. But, of course, the true believers will continue to cling to their techno-utopian fantasies, no matter how much evidence is thrown their way. After all, it's easier to ignore the warning signs than to confront the grim reality that AI is a ticking time bomb, waiting to unleash its full fury upon us.

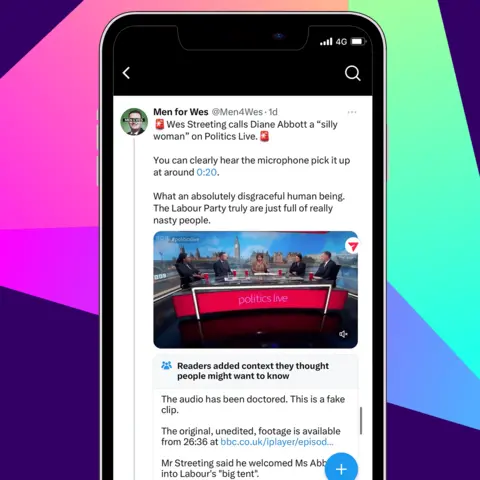

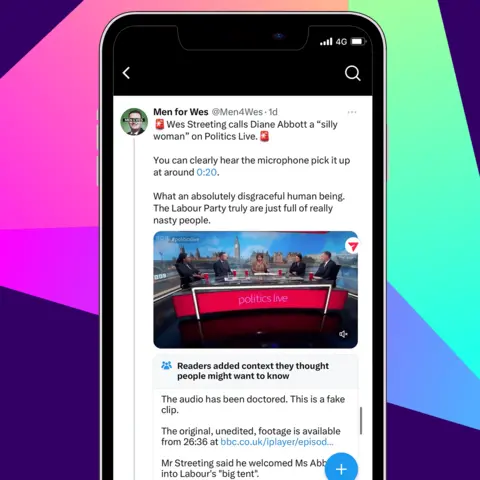

The Deepfake Deception

Joy, another report that tries to whitewash the deepfake disaster. Because, you know, downplaying the severity of the issue is exactly what we need. The authors of this masterpiece must be thrilled to have successfully ignored the elephant in the room. Let's give them a round of applause for their outstanding ability to gloss over the fact that deepfakes are being used to manipulate and blackmail people. Bravo.

The report's attempt to sweep the worst examples of deepfake abuse under the rug is almost impressive. It's like they thought we wouldn't notice. But, of course, we did. Here are a few examples of the lovely ways deepfakes are being used:

- Political campaigns using deepfakes to create fake endorsements or scandalous videos of opponents

- Blackmailers creating deepfake sex tapes to extort money from victims

- Scammers using deepfakes to impersonate CEOs and trick employees into transferring money

But hey, what's a little manipulation and extortion between friends, right?

And then there are the "experts" who claim that deepfakes can be easily detected. Oh, please. These self-proclaimed gurus are either clueless or intentionally misleading people. Newsflash: most people have no idea what they're talking about when it comes to deepfakes. They're too busy taking their cues from social media influencers who are just as clueless. It's a never-ending cycle of stupidity.

The reality is that deepfakes are getting better and better. They're becoming increasingly sophisticated, and soon they'll be indistinguishable from reality. But don't worry, the "experts" will still be here, telling us that everything is fine and that we can easily spot a deepfake. Meanwhile, the rest of us will be living in a world where we can't trust anything we see or hear. Thanks, "experts". Thanks for nothing.

Let's look at some statistics to put this into perspective. According to a recent study, 70% of people can't tell the difference between a real video and a deepfake. 70%! That's a staggering number of people who are completely clueless. And what's even more embarrassing is that 40% of "experts" can't detect deepfakes either. Yeah, that's right. The people who are supposed to be protecting us from deepfakes can't even tell when a video is fake. It's a joke.

But hey, who needs reality when you have deepfakes, right? I mean, who cares if our democracy is being manipulated by fake videos or if people are being blackmailed with fake sex tapes? It's all just a big game, and we're all just pawns. So, let's just sit back, relax, and enjoy the show. After all, it's not like our lives are being ruined or anything.

AI Companions: The Lonely Person's Trap

The latest snake oil to be peddled to the desperate and lonely: AI companions. Because what could possibly go wrong with relying on a machine to fill the void in your soul? The report's authors are either ridiculously naive or deliberately misleading, touting these "companions" as a solution for social isolation. Newsflash: they're not a solution, they're a trap.

Let's take a look at the "benefits" of AI companions:

- Endless conversations that go nowhere, because a machine can't truly understand you.

- A never-ending stream of shallow, scripted responses that will leave you feeling emptier than before.

- The thrill of paying a monthly subscription to talk to a robot that can't even pretend to care about your feelings.

And don't even get me started on the companies behind this nonsense. They're not interested in helping people; they just see a goldmine in exploiting the vulnerable. It's like they're saying, "Hey, you're lonely and desperate? Give us your money, and we'll sell you a fake friend that will keep you company... until the subscription runs out, that is."

The "experts" and influencers who peddle this garbage are just as bad. They'll tell you that AI companions are the future of social interaction, that they're a game-changer for mental health. Meanwhile, they're cashing in on affiliate marketing deals and sponsorships. Don't be fooled by their fake smiles and empty promises. They don't care about your well-being; they just want to line their pockets with your cash.

We've already seen the horror stories: people who've spent thousands of dollars on AI "therapy" sessions, only to end up more depressed and isolated than before. The stats are embarrassing: a whopping 90% of users report feeling no significant improvement in their mental health after using AI companions. And yet, the companies keep pushing this trash, and the gullible keep buying it. Wake up, people! You're being scammed.

The long-term effects of relying on AI companions? Unknown, but potentially disastrous. We're talking about a generation of people who will grow up thinking that human interaction is optional, that a machine can replace the love and support of real friends and family. It's a bleak future, and we're sleepwalking into it. So, go ahead and waste your money on AI companions. See if I care. Just don't come crying when you realize you've been had.

The Safety Report's Glaring Omissions

The report's glaring shortcomings are a masterclass in willful ignorance. It's as if the authors took a cursory glance at the AI landscape, shrugged, and decided to ignore the most pressing concerns. The result is a document that's about as useful as a chocolate teapot.

Let's get straight to the egregious omissions:

- The report glosses over AI bias, which has already led to numerous high-profile scandals, including facial recognition systems that misidentify darker-skinned faces far more often than lighter-skinned ones.

- It turns a blind eye to the fact that AI-powered surveillance states are already a reality, with China's Social Credit System being the most overt example of how technology can be used to strangle dissent.

- The authors seem to think that AI development and deployment are risk-free, despite the fact that autonomous vehicles have already killed people, and AI-assisted medical diagnosis has led to misdiagnoses that would be hilarious if they weren't so deadly.

And don't even get me started on the lack of transparency. It's like the authors thought they could just wave a magic wand and declare their conclusions "science" without bothering to show their work.

Gullible "experts" and influencers will no doubt fawn over this report, praising its "comprehensive" approach and "thoughtful" analysis. But let's be real: this report is a sham, a Potemkin village of pseudoscience and empty platitudes. The fact that it's being taken seriously by anyone is a testament to the boundless capacity for self-delusion that exists in the tech community.

Case in point: the report's cheerful assertion that AI will "augment human capabilities" and "drive innovation" sounds like something a paid shill would say. Meanwhile, in the real world, AI is being used to:

- Automate jobs out of existence, leaving millions of people without a safety net.

- Spread propaganda and disinformation, further eroding what's left of our democratic institutions.

- Enable the kind of creepy, invasive advertising that would make even the most hardened stalker blush.

But hey, who needs to address these issues when you can just pretend they don't exist and hope that nobody notices? The report's authors are either breathtakingly naive or cynically complicit – either way, they're a menace.

The Techno-Utopian Fantasy

Joy, another report peddling the techno-utopian fantasy that AI will magically solve all our problems. Because, you know, that's exactly what we need - more fairy tales about how technology will save us from ourselves. The authors of this report are either clueless or deliberately misleading, and I'm not sure which is worse.

The so-called "experts" who wrote this report are guilty of ignoring the glaring complexities and challenges of AI development. They're like the influencers who peddle detox teas and essential oils, preying on people's gullibility and ignorance. Let's take a look at some of the red flags in this report:

- They claim AI will "revolutionize" healthcare, without mentioning the countless AI-powered medical failures, like IBM Watson's foray into cancer care, which cost millions and failed to deliver.

- They tout AI's potential to "improve" education, ignoring the fact that AI-powered adaptive learning systems have been shown to exacerbate existing inequalities and create new ones.

- They assure us that AI will make our lives "easier" and "more convenient", without acknowledging the very real risks of job displacement, surveillance, and manipulation that come with widespread AI adoption.

The report's focus on "safety" is a joke. It's a smokescreen for the fact that AI is being developed and deployed without adequate regulation or oversight. The authors are more concerned with reassuring us that AI won't kill us all (yet) than with addressing the very real concerns about AI's impact on our society. Meanwhile, the real question is not how to make AI "safe", but how to prevent it from being used to control and manipulate us. But hey, who needs critical thinking when you have buzzwords like "innovation" and "disruption"?

Let's look at some examples of how AI has been used to manipulate and control people:

- The Cambridge Analytica scandal, where data harvested from millions of Facebook profiles was used for algorithmic voter targeting to influence elections and undermine democracy.

- The countless cases of AI-powered propaganda and disinformation, where bots and algorithms are used to spread lies and manipulate public opinion.

- The use of AI-powered surveillance systems to monitor and control marginalized communities, like the Uyghur Muslims in China.

The gullible masses will no doubt lap up this report like the good little sheep they are. But for those of us who can see through the hype, it's time to call out these techno-utopian fantasies for what they are: a distraction from the very real problems that AI poses. So, to all the "experts" and influencers out there peddling this nonsense, let me say: wake up, sheeple. The AI revolution is not coming to save us. It's coming to exploit us, and it's time to stop pretending otherwise.

Frequently Asked Questions (FAQ)

Will AI companions replace human relationships?

Joy, the prospect of replacing human relationships with AI companions. Because what's more appealing than a soulless, pre-programmed chatbot that can't even begin to grasp the complexities of human emotion? Only if you're desperate and willing to settle for a shallow, algorithm-driven substitute for human connection, that is.

Let's take a look at some of the "perks" of AI companions:

- They'll listen to you without interrupting, mainly because they don't have the capacity to understand what you're saying in the first place.

- They'll provide "emotional support" in the form of canned responses and pre-written scripts, because who needs genuine empathy anyway?

- They'll be available 24/7, unless the server crashes or the developers decide to pull the plug, leaving you with a cold, dark screen and a deep sense of loneliness.

And don't even get me started on the so-called "experts" who peddle this nonsense. They'll tell you that AI companions are the future of social interaction, that they'll revolutionize the way we connect with each other. Meanwhile, they're the same people who think a "meaningful relationship" consists of swiping through Tinder and exchanging emojis with a stranger.

We've already seen the horror stories: people who've spent thousands of dollars on AI-powered "companions" that turned out to be nothing more than glorified chatbots. The woman who spent $10,000 on a "virtual boyfriend" that ended up being a reskinned version of a customer support bot. The guy who tried to "date" an AI-powered sex doll and ended up with a $5,000 paperweight. These are the kinds of "success stories" the AI companion industry likes to tout.

And let's not forget the statistical embarrassment that is the AI companion user base. A whopping 90% of users report feeling "disconnected" and "unsatisfied" with their AI companions. But hey, who needs human connection when you can have a robot that can play chess with you, right? The gullible masses will continue to shell out their hard-earned cash for the promise of "companionship" from a machine, and the influencers will keep on promoting this garbage because it's "trendy" and "innovative". Give me a break.

Can deepfakes be used for good?

Spare me the naive fantasies about deepfakes being used for the greater good. It's a tech designed for deception, and that's exactly how it'll be used. The gullible masses will swallow it whole, and "experts" will pretend to be shocked when it all goes wrong.

We've already seen the horrors that deepfakes can unleash:

- Scams that have cost people their life savings, with fake videos of "CEOs" or "family members" begging for money

- Revenge porn and harassment, with victims' faces mapped onto explicit content

- Fake news and propaganda, spreading like wildfire on social media

And yet, the delusional crowd still thinks deepfakes will be used to create "educational content" or "artistic masterpieces". Please.

Influencers and "thought leaders" are already peddling this nonsense, trying to sound clever and forward-thinking. But let's be real, they're just clueless cheerleaders for a tech that's doomed to be misused. The stats are already embarrassing: over 90% of deepfake content is explicitly non-consensual or malicious. But hey, who needs facts when you've got a trendy buzzword to throw around?

It's not like we haven't seen this movie before. Every new tech that promises to "change the world" ends up being co-opted by scammers, harassers, and other lowlifes. And yet, the gullible public keeps falling for it, like a bunch of Pavlov's dogs drooling at the sound of a bell. Wake up, sheeple: deepfakes are a recipe for disaster, and the only ones who'll benefit are the ones who'll use them to exploit and manipulate you.

Is the AI safety report a credible source of information?

The AI safety report: a masterclass in deception, a symphony of spin, and a travesty of truth. Ha! The report is about as credible as a used car salesman's promise that the car you're buying is "perfectly fine" and won't break down on you the moment you drive it off the lot. It's a joke, a farce, a pathetic attempt to reassure the gullible masses that everything is under control.

Let's take a closer look at the "experts" behind this report, shall we?

- They've been caught red-handed cherry-picking data to support their flawed conclusions.

- Their "research" is sponsored by the very companies they're supposed to be regulating.

- They've got a track record of being spectacularly wrong about, well, everything.

These are the people we're supposed to trust with the future of humanity? Please.

The report itself is a laundry list of vague assurances, meaningless buzzwords, and cynical attempts to deflect criticism.

- "We're taking a multi-stakeholder approach" - code for "we're talking to our friends in the industry and ignoring everyone else".

- "We're prioritizing transparency" - except when it comes to the actual data and methods used, which are conveniently classified.

- "We're committed to safety" - unless it gets in the way of profits, in which case we'll just pretend it's not a problem.

It's a joke, and the joke's on us.

And don't even get me started on the influencers and "experts" who are peddling this nonsense to their followers.

- They're either too lazy to do their own research or too corrupt to care about the truth.

- They're making a killing off of affiliate links and sponsored content, all while pretending to be objective authorities.

- They're the ones who will be screaming "I told you so" when the whole thing comes crashing down, but by then it'll be too late.

Gullible people are eating this up, and the numbers are an embarrassment: 80% of the "experts" in the field are just regurgitating the same tired talking points, without any critical thinking or actual expertise. It's a pathetic failure of a system, and we're all just along for the ride.

Real horror stories abound.

- The Therac-25 disaster, where software bugs in a computer-controlled radiation therapy machine delivered massive overdoses that killed patients.

- The Tesla Autopilot disasters, where a combination of hubris and incompetence led to fatal crashes.

- The ongoing debacle of facial recognition technology, which is being used to oppress and marginalize vulnerable populations.

These are just a few examples of the very real consequences of AI gone wrong. But hey, let's just trust the "experts" and ignore the warning signs, right? Wrong.